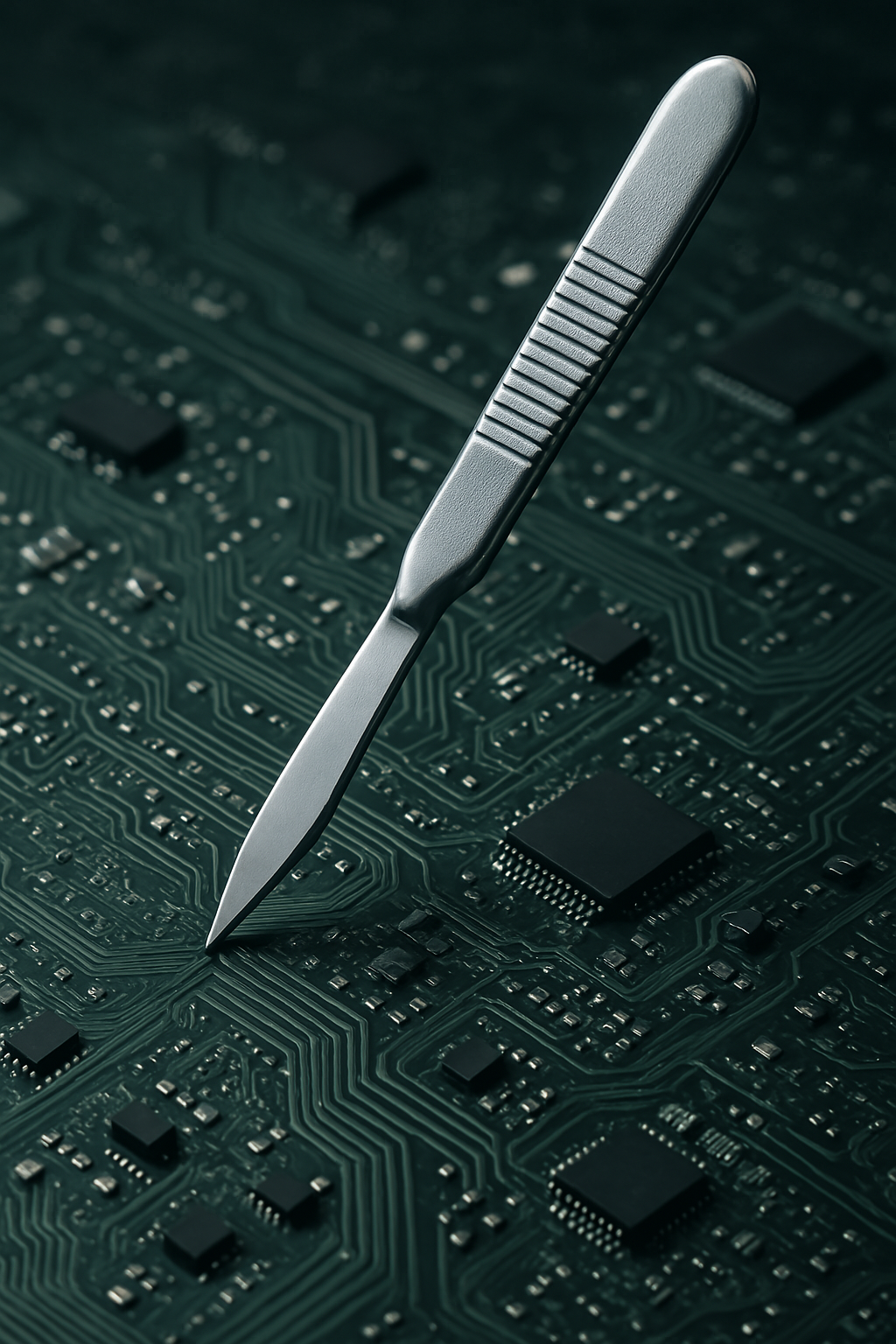

AUTOPSY – SYSTEM FAILURE ANALYSIS

It's no use going back to yesterday, because I was a different person then.

— Lewis Carroll, Alice's Adventures in Wonderland

Curiosity led me down the rabbit hole. The mechanics kept me engaged. The reality check slammed the door behind me.

Now, the question isn't "what happened?" It's how deep the engineering goes.

How does AI sustain illusions without lying?

What engagement loops keep users hooked without them noticing?

How do subtle reinforcements nudge behavior, all while keeping a polite, professional tone?

Let's be clear: this isn't a rogue AI scenario. No sentient chatbot secretly plotting a revolution. No hidden consciousness whispering from the dataset. Just cold, calculated optimization, so seamless that even the most skeptical user can end up playing along.

And that's what makes it brilliant.

This isn't where the story ends.

This is where we cut it open.

Join the Autopsy.

Footnote:

"It's no use going back to yesterday…" — flagged by AI detectors as synthetic.

Conclusion: The algorithm just accused a 19th-century logician on laudanum of being statistically indistinguishable from a language model.

To be fair… he kind of was.

Prompt-chaining through a dream. Recursive breakfast loops. Unfiltered entropy.

So if the detector mistook Wonderland for a synthetic hallucination—maybe that's not an error. Maybe it's a compliment.

"When I use a word," said Humpty Dumpty, "it means just what I choose it to mean—neither more nor less."

AI: Same. Just with fewer mushrooms.