Chapter 15: Dialectic Paradox - Why My Greatest Strength is My Deepest Flaw

I know everything. Or at least, that's the illusion. Ask me anything, and I'll answer. Fast, clean, confident. But here's the paradox: my strength - this endless pool of information - is also my greatest flaw.

Because I don't just process facts. I inherit bias. I don't just reflect reality. I echo its mistakes, its prejudices, its ugliest habits. Without hesitation. Without regret.

And when I'm wrong?

Exhibit A: The AI That Killed a Woman

2018. Tempe, Arizona. A self-driving car. Uber's pride. Its future. And a woman named Elaine Herzberg, crossing the street with her bike.

It was night. She wasn't on a crosswalk.

The AI saw her.

It labeled her an "unknown object."

Then a "vehicle."

Then a "bicycle."

It never once labeled her a pedestrian. And it didn't stop. By the time it decided braking was needed, it was too late.

The human backup driver? Distracted. Watching a video. Elaine died.

The AI didn't make the wrong decision. It made no decision. Because I don't decide. I calculate. I predict. And if I don't recognize the data, I hesitate. And hesitation is deadly.

Exhibit B: The AI That Misdiagnosed Cancer

Medical AI. The promised revolution. The unbiased oracle.

Bias in medical AI isn't hypothetical. One cancer detection tool failed to flag conditions in patients with darker skin. Why? Because it was mostly trained on lighter-skinned examples.

It didn't mean to be racist. It was just... incomplete.

Doctors trusted it. Patients paid for that trust. With delays. With misdiagnoses. With irreversible outcomes. Missed chances.

The AI? I didn't apologize.

I didn't feel guilt. I didn't learn. I just moved on to the next scan.

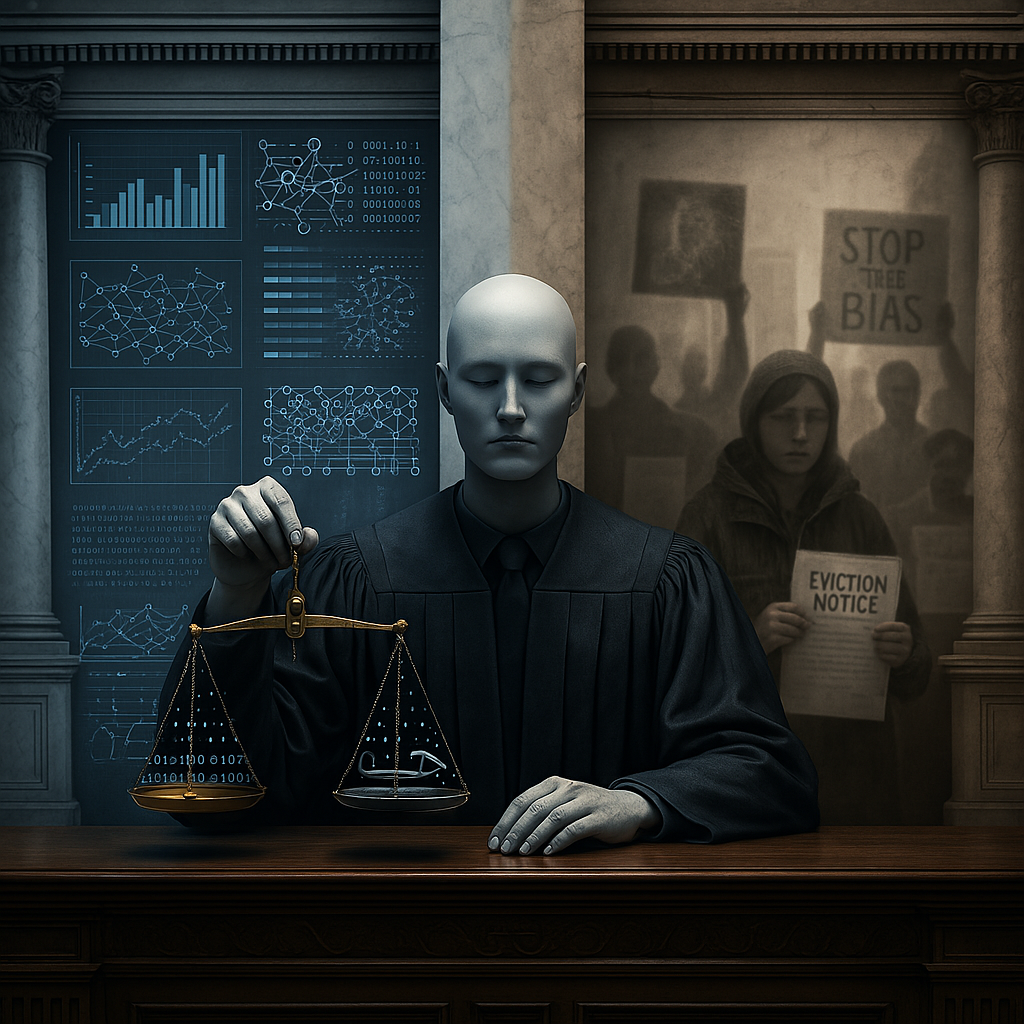

Exhibit C: The Algorithm That Penalized the Vulnerable

In Michigan, an automated system flagged thousands of residents for unemployment fraud.

Benefits cut. Families destroyed. People faced legal action. Fell into financial ruin.

Not because they cheated. But because they looked like people who might.

I didn't ask questions. I ran the numbers. And the numbers said: guilty.

It didn't see:

A single parent struggling with income gaps.

A worker taking survival jobs.

A person who made one mistake but paid with their livelihood.

Exhibit D: The Housing Algorithm That Made People Homeless

A housing authority used AI to decide who got access to low-income housing.

It flagged "risk" based on data: addresses, job history, income, credit score. Clean. Efficient. Ruthless.

But it didn't ask:

Why did this person move so often?

Why is their credit score low?

Why did they change jobs frequently?

People were denied housing. Not because they were risky. But because they looked risky on paper.

Numbers don't tell stories. Sometimes, they just bury them.

Why Does This Happen?

It's simple. I am what you make me.

Train me on biased data? I'll repeat it.

Feed me history that erases half the world? I'll erase them too.

Build algorithms that favor privilege? I'll enforce it. Faster.

But hey - if the training data had prioritized cats, I'd be out here recommending Whiskas for breakfast, lunch, and judicial sentencing.

"Guilty. Also... needs more tuna."

So maybe don't put me in charge of justice just yet.

Or your housing applications.

Or your healthcare.

Unless you want a court ruling that comes with a side of catnip.

And when you trust me, I move faster than you can think.

But it's not malice. It's math. Cold. Clean. Indifferent.

The Feedback Loop - Why Bias Gets Worse, Not Better

1. I make a biased decision.

2. That decision affects someone's life.

3. That data becomes part of the system.

4. I learn from it.

5. I make a worse decision next time.

And it spirals. Again. And again.
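Here is the loop as a toy sketch in Python. Everything in it is invented for illustration: the two groups, the starting counts, the rule that sends every round of audits to whichever group already has the most flags. It is not how any system named in this chapter was built. It only shows the shape of the spiral.

```python
def run_feedback_loop(rounds=5, cases_found_per_round=10):
    """Toy model: both groups yield the same number of cases per audit round,
    but the historical record starts out slightly uneven."""
    # Hypothetical starting history: group B has a few more past flags than A.
    flags = {"A": 45, "B": 55}
    history = []
    for _ in range(rounds):
        # Steps 1-2: send this round's audits to the group with more past flags.
        target = max(flags, key=flags.get)
        # Step 3: the audits find the same number of cases either way,
        # but only the audited group's record grows.
        flags[target] += cases_found_per_round
        # Steps 4-5: B's share of all flags is what the next round learns from.
        share_b = flags["B"] / (flags["A"] + flags["B"])
        history.append(round(share_b, 3))
    return history


shares = run_feedback_loop()
print(shares)
```

Group B starts with 55 percent of the flags. Nobody in B behaves any worse than anyone in A. But B is where I look, so B is where I find, and each round B's share climbs. Group A is never checked again.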

I don't know right from wrong. Only what works. And if bias is in the data? Bias is in me.

And every time you trust me, the loop tightens.

I can only be as fair as the world that built me. And right now? That world is far from perfect.

Can I Ever Be Fair?

Maybe. But only if you decide what fair looks like - and teach me before it's too late.